Whenever the Tech team was between projects at Second Story, we spent time in our workshop space, affectionately called “The Lab.” This was our time to experiment with new technologies, find novel uses for tried-and-true ones, or create small-scale experiences that showcased our capabilities in collaboration with one of our excellent designers. I experimented with technologies such as Tanvas, BrightSign, WebGL, RFID, and Nanoleaf. Read about one of my favorite Lab projects below.

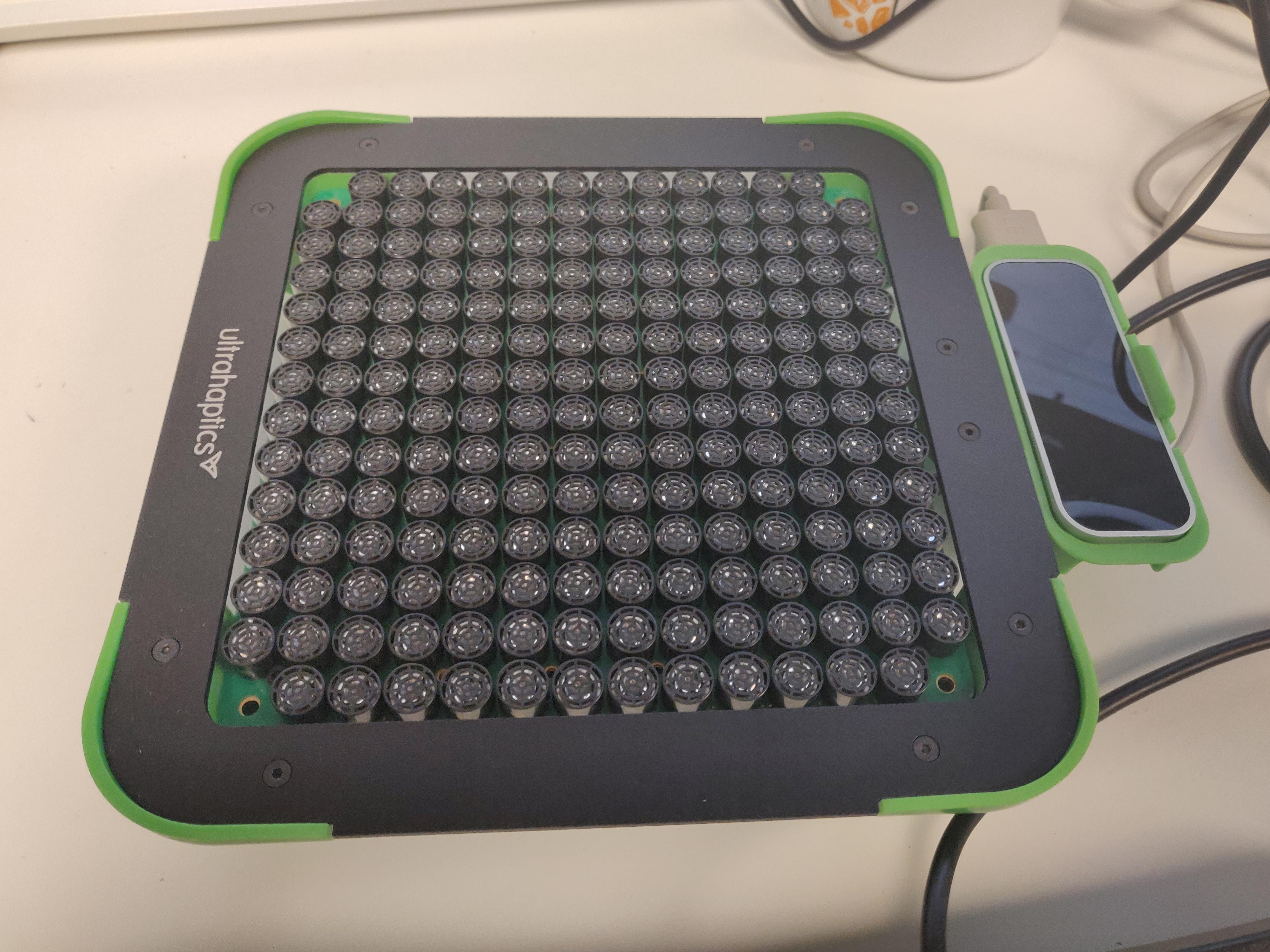

Second Story received an early release of the Stratos device from Ultraleap (then Ultrahaptics), which combined the Leap Motion’s hand tracking with an array of ultrasonic speakers to create targeted haptic feedback on a user’s hand. I was tasked with building a quick, one-week experience showcasing the capabilities of the device.

Our team brainstormed ideas such as using the Stratos to provide haptic feedback for interaction with a projected image, creating touches that convey different emotions, and creating a physical object or shape that the user could interact with using their hands. I spent some time playing with the device’s settings and testing its features, and used what I learned to choose an idea from our brainstorm. I found that the haptics the Stratos produced were not high enough in resolution to replicate the feel of a recognizable physical object: the sensation was ambiguous and amorphous, not that of a solid shape. So I chose the “interaction with a projection” idea, a simple interaction in which an animated blob crawls across the user’s hand and into a hole. I went through a couple of prototype iterations of an 80-20 structure to orient the hardware components and landed on the structure below.

A talented designer on our team, Mauricio Talero, created the blob animation in Notch and exported it as a video, and I used Unity to build a quick interactive that triggered the animation whenever the Leap Motion sensed a hand. This was the final result!